The Crypto On Nvidia’s Balance Sheet

Posted August 29, 2024

Chris Campbell

The Pony Express was the mid-1800s’ version of instant messaging—riders shaved delivery times down from months to just 10 days.

Until, that is, the telegraph came along and rendered it obsolete.

Today, in an age of instant texts and emails, it all might seem a bit boring…

But, in terms of advancing communication, it was a huge deal.

And what could happen soon with AI is way bigger. In fact, there’s nothing in history quite like it.

In terms of efficiency gains (10,000x or more), it may as well be a leap to telepathy.

And, no surprise…

Nvidia is at the tip of the spear of this trend, alongside a lesser-known crypto quietly sitting on Nvidia’s balance sheet.

Enter DisTrO, or what I’m calling…

Telepathic AI

To be clear, DisTrO is NOT the crypto on Nvidia's balance sheet. (More on that in a moment.)

DisTrO stands for "Distributed Training Over-the-Internet."

Put simply, it’s a new method designed to make training large AI models more efficient.

But HOW it does so is nothing short of magic.

Let’s start with the basics:

Large AI models are complex systems with billions or even trillions of parameters (loosely like the connections between neurons in a brain). These parameters need to be adjusted, or “trained,” for the model to perform tasks.

(Tasks like responding to prompts, recognizing images, translating languages, etc.)

To train these models, you need A LOT of computing power. Often it comes from GPUs, specialized processors built for heavy computation, like the ones Nvidia makes.

Most of the time, one GPU isn’t enough. So multiple GPUs have to work together.

During training, each GPU works on a piece of the job (typically its own slice of the training data) and needs to share its results, called gradients, with the others.

This requires a lot of data to be sent back and forth, which is why these GPUs need to be connected by high-speed, specialized networks.
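For the technically curious, here’s a minimal sketch of what that sharing step does. It’s the classic “all-reduce”: every GPU’s gradients are averaged, and every GPU gets the same averaged result back. This is a naive, single-machine illustration (the function name `all_reduce_mean` is hypothetical); real clusters run optimized ring or tree versions through libraries like Nvidia’s NCCL.

```python
from typing import List


def all_reduce_mean(worker_grads: List[List[float]]) -> List[List[float]]:
    """Average gradients element-wise across workers; every worker gets the result."""
    n_workers = len(worker_grads)
    n_params = len(worker_grads[0])
    # Sum each parameter's gradient across all workers...
    summed = [sum(g[i] for g in worker_grads) for i in range(n_params)]
    # ...divide by the worker count to get the average...
    mean = [s / n_workers for s in summed]
    # ...and hand every worker its own copy of the averaged gradient.
    return [list(mean) for _ in range(n_workers)]


# Two hypothetical GPUs, each holding gradients for the same three parameters.
print(all_reduce_mean([[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]]))
# prints [[2.0, 2.0, 2.0], [2.0, 2.0, 2.0]]
```

Every GPU ends up with identical gradients, which is exactly why all of that data has to cross the network on every training step.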

Also, the amount of data grows with the model size (every parameter has its own gradient) and with the number of GPUs exchanging it. For large models, the bandwidth requirements become astronomical.
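Here’s some rough arithmetic behind that growth. It assumes fp32 gradients (4 bytes per parameter) and a standard ring all-reduce, where each of N GPUs sends about 2 × (N - 1) / N times the gradient size per step. The 1-billion-parameter model and the function names are hypothetical, purely for illustration.

```python
def gradient_bytes(n_params: int, bytes_per_value: int = 4) -> int:
    """Size of one full gradient: one value per parameter (4 bytes each in fp32)."""
    return n_params * bytes_per_value


def ring_allreduce_bytes_per_gpu(grad_bytes: float, n_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce: 2 * (N - 1) / N * gradient size."""
    return 2 * (n_gpus - 1) / n_gpus * grad_bytes


def ring_allreduce_total_bytes(grad_bytes: float, n_gpus: int) -> float:
    """Bytes sent across the whole cluster per step: N GPUs times the per-GPU amount."""
    return n_gpus * ring_allreduce_bytes_per_gpu(grad_bytes, n_gpus)


# Hypothetical 1-billion-parameter model with fp32 gradients (about 4 GB per step).
grad = gradient_bytes(1_000_000_000)
for n in (2, 8, 64):
    per_gpu_gb = ring_allreduce_bytes_per_gpu(grad, n) / 1e9
    total_gb = ring_allreduce_total_bytes(grad, n) / 1e9
    print(f"{n:>3} GPUs: {per_gpu_gb:.2f} GB sent per GPU, {total_gb:.0f} GB in total")
```

Per-GPU traffic levels off near twice the gradient size, but total traffic across the cluster keeps climbing with every GPU you add, and all of it scales with the parameter count.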

This creates a HUGE barrier to entry. Typically, only large tech companies can afford to set up and run these systems.

DisTrO could change that.

Decentralized Training

This gets in the weeds a bit, but here’s the gist:

DisTrO employs a special optimizer (DisTrO-AdamW) designed to reduce the frequency and volume of data exchange.

Get this: it can reduce the data shared between GPUs by 10,000x or more. And it does so WITHOUT degrading the quality or speed of the training process.
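To put 10,000x in perspective, here’s a back-of-the-envelope sketch. It assumes a hypothetical 4 GB gradient per step (a 1-billion-parameter model in fp32) sent once over a hypothetical 100 Mbit/s home upload link, and it ignores latency and protocol overhead. It says nothing about HOW DisTrO achieves the reduction; only the factor itself is plugged in.

```python
def transfer_seconds(n_bytes: float, link_mbps: float) -> float:
    """Seconds to send n_bytes over a link of link_mbps megabits per second."""
    return n_bytes * 8 / (link_mbps * 1e6)


full_gradient_bytes = 4e9   # hypothetical: 1B fp32 parameters = 4 GB per step
reduction = 10_000          # the reduction factor mentioned above
upload_mbps = 100           # hypothetical home upload speed

before = transfer_seconds(full_gradient_bytes, upload_mbps)
after = transfer_seconds(full_gradient_bytes / reduction, upload_mbps)
print(f"before: {before:.0f} s per step, after: {after:.3f} s per step")
# prints "before: 320 s per step, after: 0.032 s per step"
```

Over five minutes of uploading per training step versus a few hundredths of a second: that’s the gap between impractical and workable on an ordinary Internet connection.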

By the way, this is very fresh news. You’re probably one of the first to see it laid out in simple terms.

Nous Research, an elite team of AI researchers, revealed it’s possible in a report released just this week.

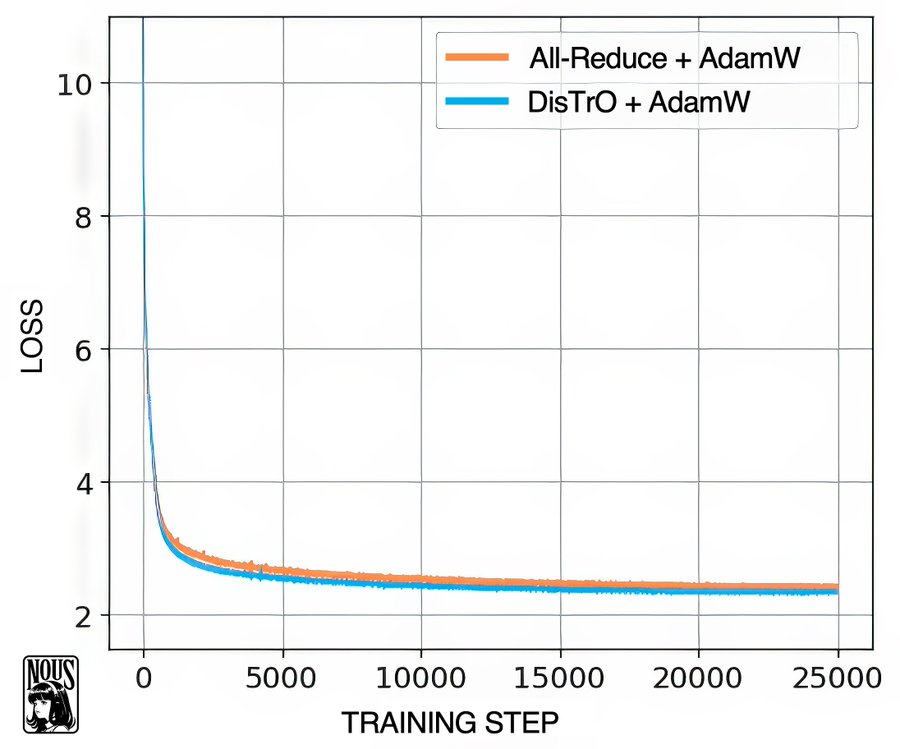

Check out this chart from Nous.

“All-Reduce + AdamW” is the traditional setup: the standard communication method (All-Reduce) paired with the standard optimizer (AdamW).

“DisTrO + AdamW” replaces those traditional methods.
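AdamW is what actually turns the shared gradients into updated weights on each step. Here’s a minimal pure-Python sketch of the textbook AdamW update (Adam with decoupled weight decay). The function name and default hyperparameters are illustrative assumptions, and this is NOT DisTrO’s optimizer.

```python
import math
from typing import List, Tuple


def adamw_step(
    params: List[float],
    grads: List[float],
    m: List[float],
    v: List[float],
    t: int,
    lr: float = 1e-3,
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
    weight_decay: float = 0.01,
) -> Tuple[List[float], List[float], List[float]]:
    """Apply one AdamW step; t is the 1-based step count. Returns (params, m, v)."""
    new_p, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Decoupled weight decay: shrink the weight directly, not through the gradient.
        p -= lr * weight_decay * p
        # Running averages of the gradient (m) and of the squared gradient (v).
        m_i = beta1 * m_i + (1 - beta1) * g
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction, since both averages start at zero.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        # Step each weight against its gradient, scaled per parameter.
        p -= lr * m_hat / (math.sqrt(v_hat) + eps)
        new_p.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_p, new_m, new_v


# Hypothetical: two weights, averaged gradients from the all-reduce, first step.
params, m, v = adamw_step([1.0, 1.0], [2.0, -2.0], [0.0, 0.0], [0.0, 0.0], t=1)
print(params)
```

In the traditional All-Reduce setup, every GPU runs this same update on the same averaged gradients, which keeps all the model copies identical.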

Obviously, this is a profound leap.

But the wider implications aren’t instantly obvious.

In short, AI training can shift from being location-dependent to being completely decentralized.

See, in traditional methods, the need for constant communication between GPUs requires them to be closely knit.

With DisTrO, so little data needs to be transferred between GPUs that it’s technically feasible to train models even when they’re spread out geographically, and even when they’re connected over the standard Internet rather than specialized networks.

Again, WITHOUT taking any more time or losing any of the quality.

Instead of huge data centers humming with thousands of interconnected GPUs…

Imagine a vast network of computers—from beefy gaming rigs to even modest laptops—all working together to train the next breakthrough AI model.

This Could Change Everything

DisTrO could change AI development by making it more accessible to a broader range of organizations.

Startups, academic institutions, and individual researchers could now participate in training large AI models without needing expensive infrastructure.

When no single company controls the training process, researchers and institutions gain the freedom to collaborate and explore new techniques and models.

Even in regions with less advanced infrastructure.

More participants means more competition, which fuels innovation and drives faster progress.

As costs decrease, smaller companies could compete with industry giants, potentially disrupting existing market dynamics and enabling new business models.

What’s more…

The impact of DisTrO might also extend to hardware and infrastructure. By reducing the need for high-bandwidth connections, DisTrO could influence the design of future GPUs and data centers.

Data centers might become more decentralized and modular.

There’s ONE crypto that stands to benefit the most from this trend. It’s no coincidence that it’s the only crypto Nvidia currently holds on its balance sheet.

AND

It’s the ONLY crypto in our Paradigm Mastermind Group portfolio. (By the way, we beat Nvidia to the punch.)

Who else could benefit? If you guessed Nvidia, you’re on the right track.