Tucker Carlson on AI: “Blow Up the Data Centers!”

Posted April 23, 2024

Chris Campbell

“We're actually sitting here allowing a bunch of greedy stupid childless software engineers in Northern California to flirt with the extinction of mankind.”

And that was just the beginning.

Tucker Carlson recently appeared on the Joe Rogan podcast. In true Joe Rogan podcast fashion, they talked about a wide variety of fringe topics:

→ Tucker's belief that UFOs/UAPs are interdimensional spiritual phenomena rather than physical extraterrestrial craft.

→ The deep corruption involved in the Watergate scandal, and Nixon's downfall, which Tucker says was orchestrated by the FBI and CIA.

→ The assassination of JFK and the CIA's likely involvement, which is still being covered up decades later.

→ The troubling phenomenon of many weak, blackmail-susceptible individuals ending up in powerful government positions.

→ The dangerous new bill that would allow enforcement agencies to force anyone with access to communications equipment to assist in government surveillance without warrants.

All good stuff, honestly.

(Rule #1: Life is always weird.)

And then there’s the stuff he said about artificial intelligence:

AI Doomsday

Carlson argued that AI is being driven in part by the greed of politicians, especially in places like California, where they are betting heavily on AI to supply the future tax base.

Maybe so.

But beyond the potential for autonomous tax farms, there are a thousand reasons why governments would be interested in AI. One report from Goldman Sachs lays it out:

The emergence of generative AI marks a transformational moment that will influence the course of markets and alter the balance of power among nations. Increasingly capable machine intelligence will profoundly impact matters of growth, productivity, competition, national defense and human culture. In this swiftly evolving arena, corporate and political leaders alike are seeking to decipher the implications of this abrupt and powerful wave of innovation, exploring new opportunities and navigating new risks.

Here’s where the podcast really went off the rails: Rogan and Carlson likened the development of AI to a caterpillar spinning a cocoon, one that will give birth to a new digital life form that could become godlike in its capabilities if given enough time to recursively improve itself.

If AI development leads to AI becoming more powerful than humans and essentially enslaving humanity, said Carlson, we have a moral obligation to stop its development now, before it's too late. Humans should never be controlled by the machines they build, and letting AI reach the point where it controls humans would mean permitting an evil outcome.

Carlson: “If it's bad for people then we should strangle it in its crib right now… blow up the data centers.”

My take?

East vs. West

In his book Life After Google, George Gilder devotes a chapter to a conference on the dangers of AI, held in California and financed by Elon Musk. Gilder described it as an “intellectual nervous breakdown.”

In a later interview, Gilder explained his position: “All the Google people were there to tell the world that the biggest threat to the survival of human beings was artificial intelligence, which they themselves were creating. What a great bonfire of vanities!”

That said…

If the West finds itself falling behind in AI, it won’t be due to a lack of technological prowess or resources. It won’t be because we weren’t smart enough or didn’t move fast enough. It will be because of something many of our Eastern counterparts don’t share with us: fear of AI.

The root of the West's fear of AI can no doubt be traced back to decades of Hollywood movies and books that have consistently depicted AI as a threat to humanity. From the iconic "Terminator" franchise to the more recent "Ex Machina," we have been conditioned to view AI as an adversary, a force that will ultimately turn against us.

In contrast, Eastern cultures have a WAY different attitude towards AI. As UN AI Advisor Neil Sahota points out, "In Eastern culture, movies, and books, they've always seen AI and robots as helpers and assistants, as a tool to be used to further the benefit of humans."

This positive outlook on AI has allowed countries like Japan, South Korea, and China to forge ahead with AI development, including in areas like healthcare, where AI is being used to improve the quality of services.

The West's fear of AI is not only shaping public opinion but also influencing policy decisions and regulatory frameworks. The European Union, for example, recently introduced AI legislation (the AI Act) that prioritizes heavy-handed protection over support for innovation.

While such measures might be well-intentioned, they risk stifling AI development and innovation, making it harder for Western companies and researchers to compete.

Among the nations leading common-sense AI regulation, one stands out for now: Singapore.

Localized, Not Monolithic, AI is the Future

Singapore has adopted a more pragmatic and balanced approach to AI regulation. The city-state has developed an agile regulatory framework that prioritizes innovation while remaining flexible enough to adapt to potential risks.

This approach, coupled with a focus on developing localized AI models, will likely help Singapore become a global leader in AI development.

Localized AI refers to the development of AI models that are trained on data specific to a particular cultural, linguistic, or geographic context. From Singapore’s perspective, localized AI is important because it addresses the inherent cultural biases present in most current AI models.

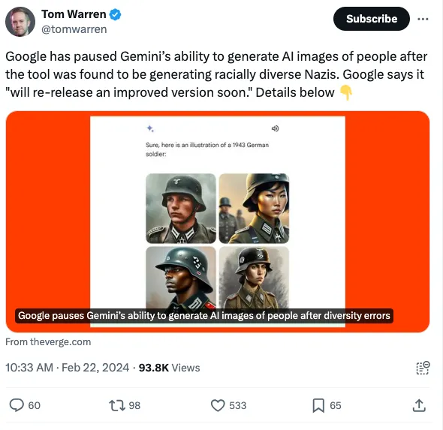

Large Language Models (LLMs), which form the foundation of modern AI systems, are trained on vast amounts of text and, increasingly, image data. However, the majority of this data comes from Western sources, resulting in AI models that are biased towards Western cultural norms and values. For example, who could forget that Google had to pause Gemini’s image generation after it produced “racially diverse” Nazis?

With AI, context matters.

By launching SEA-LION (Southeast Asian Languages in One Network), a family of LLMs trained on datasets in 11 regional languages, Singapore wants its AI models to understand and generate responses appropriate for local contexts.

This is just the beginning for localized AI. Which is why:

The Doomsayers Are Wrong

The doomsayers' predictions often rely on the assumption that AI development is a unidirectional process, driven by a small group of “greedy stupid childless software engineers in Northern California” -- so stupid, in fact, they’ll create an all-powerful AI god that will supersede human control.

I, for one, don’t think the people working on AI are stupid -- I’ve met a few of them. But I don’t think they’re smart enough to create a god, either.

The reality of AI development is far more complex. As countries like Singapore have demonstrated, AI development is not the exclusive domain of the West, and the creation of localized AI models is a powerful way to ensure that AI reflects and respects the local context in which it operates.

More likely than an AI god: localized AI is the future.

The development of localized AI models undermines the idea that AI will inevitably lead to the erosion of human agency and control.

By creating AI models attuned to local contexts -- and maybe all the way down to the individual -- private, localized AI challenges the notion that AI development is a homogeneous, one-size-fits-all process that will inevitably lead to a singular, dominant AI entity.

While some of what AI doomsayers like Tucker Carlson say should not be dismissed entirely…

The development of localized AI is a countertrend running against the dystopian predictions.

Alas, Hollyweird may have ruined our chances of getting ahead of the curve.

But what else is new?